Table of Links

II. Overview of Error Mitigation Methods

IV. Results and Discussions, and References

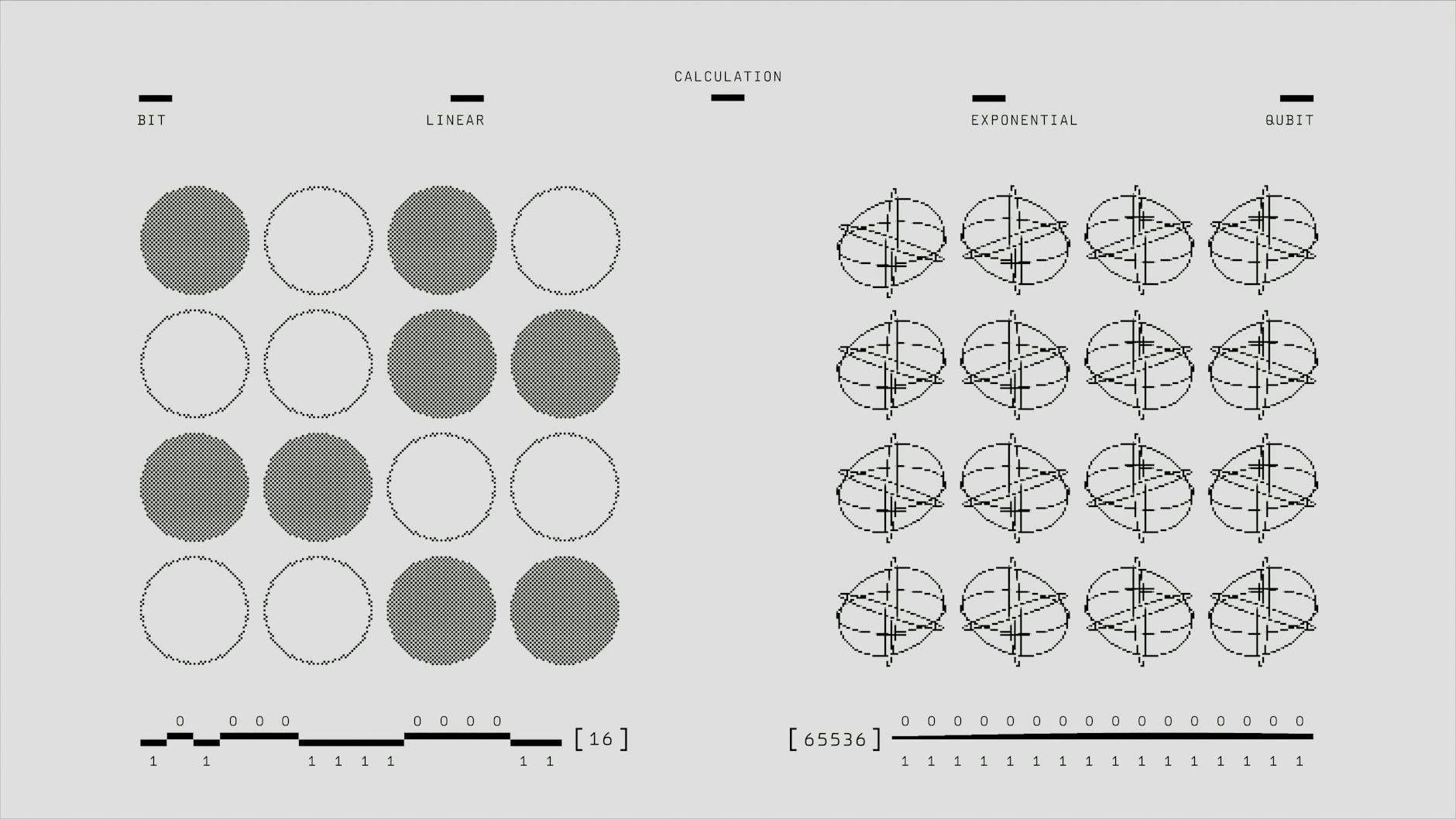

II. OVERVIEW OF ERROR MITIGATION METHODS

For completeness, we list and describe the different classes of error mitigation. Currently, the established methods for error mitigation fall into one of five classes:

- Zero Noise Extrapolation (ZNE);
- Probabilistic Error Cancellation (PEC);
- Pauli Twirling;
- Measurement Error Mitigation; and
- Machine Learning techniques.

Each of the error mitigation classes has its own advantages and disadvantages. The advantages range from ease of implementation to a minimal number of assumptions; the disadvantages range from increased time to run an algorithm to an exponential increase in the number of gates in a circuit. The advantages and shortcomings of each class are given below.

![FIG. 1. Circuit to test the technique in Certo et al. [1]. Here the circuit depth of the ansatz Two-Local circuit was doubled, resulting in three layers of Ry gates and two layers of one-to-all control-Z gates in between the Ry layers.](https://cdn.hackernoon.com/images/fWZa4tUiBGemnqQfBGgCPf9594N2-3e8347b.png)

a. ZNE: ZNE works with unknown noise models and can be used with different algorithms, including variational methods. In addition, it can be applied in digital or analog modes. However, ZNE assumes that the noise model is time invariant and requires running the experiment several times. Moreover, ZNE can be sensitive to the method used to amplify the noise, and it needs to be combined with other error mitigation methods since it cannot correct for state preparation and measurement (SPAM) errors [6, 8–10].
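To make the extrapolation step concrete, the following is a minimal sketch of Richardson-style ZNE, assuming the expectation value has already been measured at several noise scale factors. The function names and the exponential-decay toy noise model are hypothetical and chosen only for illustration; they are not taken from the paper.

```python
import math


def richardson_extrapolate(scales, values):
    """Evaluate at scale 0 the polynomial that interpolates (scale, value) pairs.

    This is Lagrange interpolation evaluated at zero noise.
    """
    if len(scales) != len(values):
        raise ValueError("scales and values must have the same length")
    if len(set(scales)) != len(scales):
        raise ValueError("noise scale factors must be distinct")

    estimate = 0.0
    for i, (s_i, v_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, s_j in enumerate(scales):
            if j != i:
                weight *= s_j / (s_j - s_i)
        estimate += weight * v_i
    return estimate


def toy_noisy_expectation(scale, ideal=1.0, decay=0.05):
    """Hypothetical stand-in for a hardware run: the signal decays with noise scale."""
    return ideal * math.exp(-decay * scale)


# The experiment is repeated once per noise scale factor (scale 1 = native noise).
scales = [1.0, 2.0, 3.0]
values = [toy_noisy_expectation(s) for s in scales]
zne_estimate = richardson_extrapolate(scales, values)

print("unmitigated:", values[0])
print("ZNE estimate:", zne_estimate)
```

The sketch also shows where the costs noted above come from: each scale factor is a separate run of the experiment, and the result depends on how faithfully the amplified runs follow the assumed noise scaling.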

b. PEC: PEC can accurately correct for noise on an arbitrary number of qubits and is especially helpful for local dephasing noise and crosstalk errors. Despite these advantages, the PEC sampling overhead scales exponentially with error rates and circuit depth, and its application may be limited to noise cancellation in simulating open quantum dynamics [11–14].
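The exponential overhead can be illustrated with a short sketch. It assumes the common quasi-probability picture in which each noisy gate contributes a factor `gamma_per_gate >= 1` (equal to 1 only for a noiseless gate, and growing with the error rate), and in which the number of samples needed for a target precision scales as the square of the total factor, up to constant factors. The function name and the numeric values are hypothetical.

```python
import math


def pec_sample_count(gamma_per_gate, n_gates, precision):
    """Rough number of circuit samples PEC needs, up to constant factors.

    gamma_per_gate: assumed one-norm of the quasi-probability decomposition
                    of a single noisy gate (>= 1).
    n_gates:        number of noisy gates in the circuit.
    precision:      target additive error on the expectation value.
    """
    if gamma_per_gate < 1.0:
        raise ValueError("gamma_per_gate must be at least 1")
    if n_gates < 0 or precision <= 0:
        raise ValueError("n_gates must be >= 0 and precision must be > 0")

    total_gamma = gamma_per_gate ** n_gates  # factors multiply across gates
    return math.ceil((total_gamma / precision) ** 2)


# Overhead grows exponentially with the gate count for a fixed per-gate factor.
for n_gates in (10, 50, 100, 200):
    print(n_gates, pec_sample_count(1.02, n_gates, 0.01))
```

Because the per-gate factors multiply, doubling the number of gates squares the overhead relative to the noiseless sample count, which is the scaling that limits PEC on deep circuits.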

d. Measurement Error Mitigation: These methods make a general assumption about the noise, with some restrictions, and analyze only the expectation, making the technique purely classical. There are different implementations, including assuming independence, modeling the assignment matrix with a continuous-time Markov process, solving via constrained optimization, or using a classical neural network to parse the data and separate out the noise. However, the overhead increases exponentially with the circuit size or number of qubits, the added steps increase the time to solution, and some methods require a trained classical model [20–26].
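As an illustration of the independence assumption, the sketch below corrects a measured probability vector by inverting one 2x2 assignment matrix per qubit. The readout error rates, the function names, and the Bell-state example are hypothetical; in practice the rates would come from calibration circuits.

```python
def assignment_matrix(e0, e1):
    """Per-qubit assignment matrix; columns = prepared state, rows = measured state.

    e0: assumed probability of reading 1 when 0 was prepared.
    e1: assumed probability of reading 0 when 1 was prepared.
    """
    return [[1.0 - e0, e1], [e0, 1.0 - e1]]


def invert_2x2(m):
    """Invert a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("assignment matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def apply_on_qubit(matrix, probs, qubit):
    """Apply a 2x2 matrix along one qubit of a probability vector.

    Bit `qubit` of the vector index is that qubit's measured value.
    """
    out = list(probs)
    stride = 1 << qubit
    for idx in range(len(probs)):
        if (idx & stride) == 0:
            p0, p1 = probs[idx], probs[idx | stride]
            out[idx] = matrix[0][0] * p0 + matrix[0][1] * p1
            out[idx | stride] = matrix[1][0] * p0 + matrix[1][1] * p1
    return out


def mitigate(noisy_probs, error_rates):
    """Undo independent readout errors, one qubit at a time."""
    probs = list(noisy_probs)
    for qubit, (e0, e1) in enumerate(error_rates):
        inverse = invert_2x2(assignment_matrix(e0, e1))
        probs = apply_on_qubit(inverse, probs, qubit)
    return probs


# Hypothetical two-qubit example: an ideal Bell-state distribution.
ideal = [0.5, 0.0, 0.0, 0.5]
error_rates = [(0.02, 0.05), (0.03, 0.04)]

noisy = list(ideal)
for qubit, (e0, e1) in enumerate(error_rates):
    noisy = apply_on_qubit(assignment_matrix(e0, e1), noisy, qubit)

mitigated = mitigate(noisy, error_rates)
print("noisy:", noisy)
print("mitigated:", mitigated)
```

Under independence, the full assignment matrix never has to be built, but the probability vector itself still has one entry per bitstring, so the cost remains exponential in the number of qubits. With finite shot counts, direct inversion can also return small negative entries, which is one motivation for the constrained-optimization variants mentioned above.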

e. Machine Learning Techniques: These techniques leverage neural network architectures to mitigate the noise, using a minimal number of assumptions about the noise. Furthermore, pretrained networks allow for transfer learning to new circuits, considerably decreasing the time needed to retrain the network. Autoencoders tend to play a prominent role in these techniques. However, machine learning adds at least polynomial growth to the length of the circuit through the extra gates it introduces, and it relies on a variational model that requires data and time to train. Finally, statistical models have a large potential to be undertrained and biased [27–29].

Authors:

(1) Anh Pham, Deloitte Consulting LLP;

(2) Andrew Vlasic, Deloitte Consulting LLP.

This paper is